Error Beneath the WAVs

This is a follow-up to Why I Ripped The Same CD 300 Times. By the end of that page I'd identified a fragment of audio data that could cause read errors even if it was isolated and burned to a fresh CD. Further testing yielded a "cursed WAV" that consistently prevents perfect rips across different brands of optical drive, ripping software, and operating system.

EDIT (2018-08-10): It worked! With the power of the two-sheep LTR-40125S I can successfully rip the original discs, with bit-exact audio data and a matching AccurateRip report.

🐻 Hi there! Arrived from IXBT? This is similar to the post «Магия чисел» ("The Magic of Numbers"), but the source of errors is a little different. Please see the CDDA vs CD-ROM section.

“History became legend. Legend became myth.”

The root cause would have been forever mysterious and unknown to me, but for this Hacker News comment by userbinator:

It is likely "weak sectors", the bane of copy protection decades ago and of which plenty of detailed articles used to exist on the 'net, but now I can find only a few:

http://ixbtlabs.com/articles2/magia-chisel/index.html

https://hydrogenaud.io/index.php/topic,50365.0.html

http://archive.li/rLugY

This page explores how "weak sectors" are caused by bad encoding logic in a CD burner. What probably happened is that the artist gave the factory a master on CD-R, which had been burned on a drive with affected firmware. The master contained the bad EFM encoding, which was accurately duplicated into the pressed CDs.


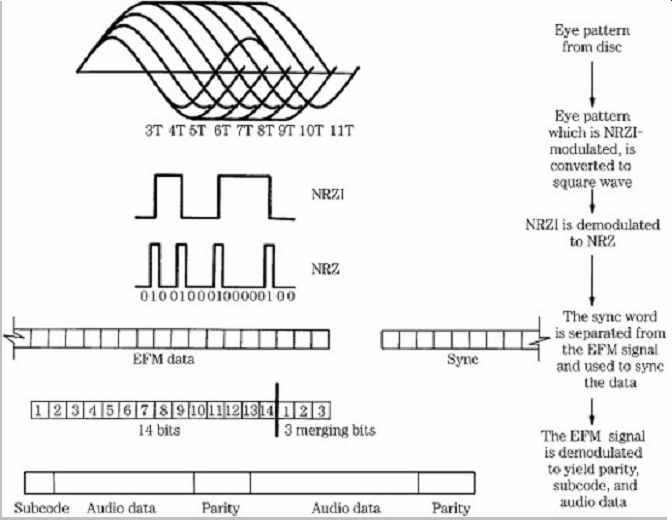

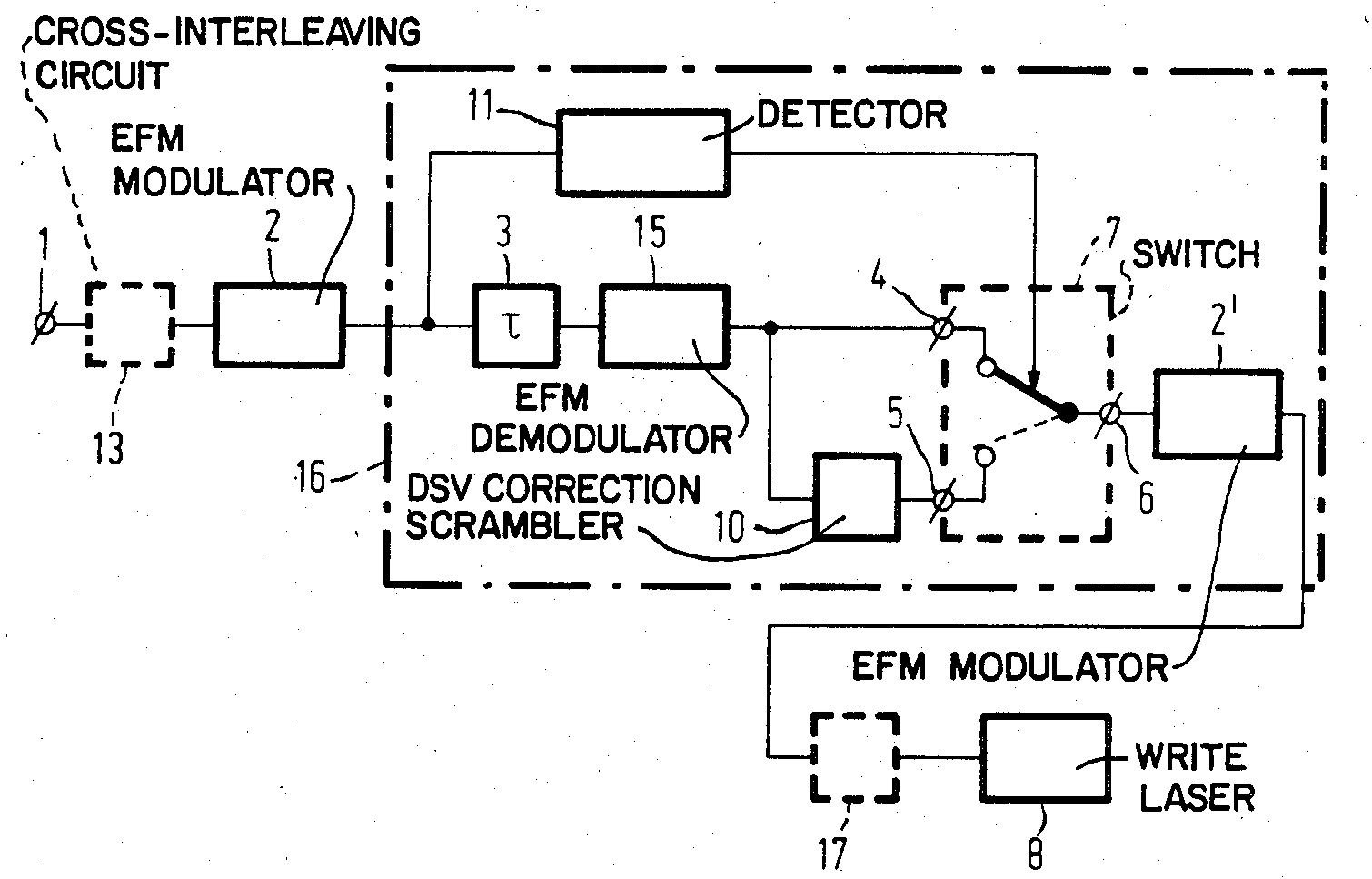

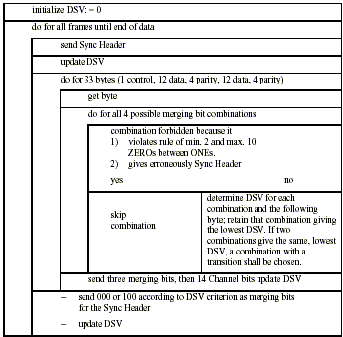

Physically, a CD's data track is a spiral of "pits" and "lands", where at each clock cycle a transition is "1" and lack of transition is "0". Directly encoding data bytes in this format would cause some transitions to occur too quickly for a detector to track, so bytes are "stretched" to 14 bits using eight-to-fourteen modulation (EFM). The sequential "EFM codewords" are separated by three "merging bits", which are chosen by the writing device under two constraints:

- Consecutive 1s in the bitstream must be separated by at least two and at most ten 0s.

- The bitstream should avoid "DC bias" by keeping the total lengths of pits and lands roughly equal, i.e. keeping the running digital sum value (DSV) close to zero.
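To make those two constraints concrete, here is a minimal Python sketch of how an encoder could pick merging bits. It assumes the run-length limits above (two to ten 0s between consecutive 1s), converts channel bits to pit/land levels with NRZI (a 1 toggles the level, a 0 keeps it), and greedily picks whichever of the four legal merging patterns keeps the running DSV smallest. The codewords in the example are hypothetical stand-ins, not entries from the real EFM table, and a real encoder may look further ahead than one symbol.

```python
# Merging-bit patterns with at most one 1 (two 1s in three bits would be too close).
MERGING_CANDIDATES = ("000", "001", "010", "100")


def nrzi_levels(channel_bits: str, level: int = 1) -> list[int]:
    """Map channel bits to +1/-1 pit/land levels: a 1 toggles the level, a 0 keeps it."""
    levels = []
    for bit in channel_bits:
        if bit == "1":
            level = -level
        levels.append(level)
    return levels


def dsv(channel_bits: str) -> int:
    """Digital sum value: running total of the +1/-1 levels."""
    return sum(nrzi_levels(channel_bits))


def satisfies_rll(channel_bits: str, d: int = 2, k: int = 10) -> bool:
    """Every run of 0s between two 1s must have length d..k; edge runs at most k."""
    runs = channel_bits.split("1")
    if any(not d <= len(run) <= k for run in runs[1:-1]):
        return False
    return len(runs[0]) <= k and len(runs[-1]) <= k


def choose_merging_bits(stream: str, next_codeword: str) -> str:
    """Greedy choice: the legal merging bits giving the smallest |DSV| after next_codeword."""
    best = None
    for merge in MERGING_CANDIDATES:
        candidate = stream + merge + next_codeword
        if not satisfies_rll(candidate):
            continue
        score = abs(dsv(candidate))
        if best is None or score < best[0]:
            best = (score, merge)
    if best is None:
        raise ValueError("no merging bits satisfy the run-length limits")
    return best[1]


def join_codewords(codewords: list[str]) -> str:
    """Concatenate 14-bit codewords, inserting 3 merging bits between each pair."""
    stream = codewords[0]
    for codeword in codewords[1:]:
        stream += choose_merging_bits(stream, codeword) + codeword
    return stream


if __name__ == "__main__":
    # Hypothetical codewords for illustration only; not taken from the EFM table.
    words = ["01001000100000", "00100000010010", "10000100000100"]
    stream = join_codewords(words)
    print(stream, len(stream), dsv(stream))
```

When a codeword boundary leaves only one legal choice (as between the second and third words here, where only 000 works), the encoder has no freedom left to steer the DSV.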

It appears that in the ~15 years between the optical disc's invention and the spread of home burning, knowledge of the EFM modulator's role in reducing DC bias was lost.

US patent US06614934 (granted 1986-07-29):

In order to maintain at least the minimum run length when the channel bits of successive symbols are merged into a single channel bit stream, at least two additional "merging bits" are added to the channel bits for each symbol. As a result of this, however, the digital sum value (DSV) of the channel bits of successive symbols may become appreciable, [...]

It has been found that under certain conditions, despite the addition of merging bits to minimize the d.c. unbalance (or DSV) of the channel bits, the DSV may become sufficiently significant to adversely affect read-out of the channel bits.

Contrast with this quote from later material (circa 2002):

The CD-Reader has trouble reading CD's with a high DSV, because (Not sure about this info, this is just an idea from Pio2001, a trusted source), the pits return little light when they are read.

Come Sing Along With the Pirate Song

All of this would have remained an obscure detail of CD manufacturing until some Macrovision employee circa 2000 realized that consumer-grade CD burners didn't implement DSV control. Instead of responsibly reporting defective hardware, they decided to build digital restrictions products around bit patterns known to trigger bad EFM modulation. The resulting data corruption could be used to detect pirated copies, with a minor side effect of preventing customers from using legally purchased software.

The piracy scene named these difficult-to-modulate bit patterns "weak sectors".

userbinator's comment links to http://sirdavidguy.coolfreepages.com/SafeDisc_2_Technical_Info.html, which contains a concrete example of a "weak sector" pattern:

Feeding a regular bit pattern into the EFM encoder can cause a situation in which the merging bits are not sufficient to keep the DSV low. For example, if the EFM encoder were fed with the bit pattern "D9 04 D9 04 D9 04 D9 04 D9 04 D9 04 D9 04 D9 04 D9 04 D9 04 D9 04" [...]

It also speculates that correct EFM modulation was abandoned due to performance concerns:

The algorithm for calculating the merging bits is far too slow to be viable in an actual CD-Burner. Therefore, CD designers had to come up with their own algorithms, which are faster. The problem is, when confronted with the weak sectors, the algorithms cannot produce the correct the merging bits. This results in sectors filled with incorrect EFM-Codes. This means that every byte in the sector will be interpreted as a read error. The error correction is not nearly enough to correct every byte in the sector (obviously).

This seems plausible: the early 2000s was a time of fierce competition between optical drive vendors, and speed was king. A drive that could only write at 4x would lose sales to its 16x competitors, even if its output was technically more correct.
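The SafeDisc page's point about regular patterns can be illustrated with a toy model. In this sketch the codeword is hypothetical (not a real EFM table entry): it starts and ends with a 1, so the only legal merging bits between repetitions are 000, and it contains an even number of 1s, so every repetition begins at the same pit/land polarity. Each copy therefore adds the same nonzero amount to the DSV, and no choice of merging bits can pull it back toward zero.

```python
# Hypothetical 14-bit codeword: starts and ends with 1, contains four 1s.
CODEWORD = "10001000100001"
MERGING_CANDIDATES = ("000", "001", "010", "100")


def nrzi_levels(channel_bits: str, level: int = 1) -> list[int]:
    """Map channel bits to +1/-1 levels: a 1 toggles the level, a 0 keeps it."""
    levels = []
    for bit in channel_bits:
        if bit == "1":
            level = -level
        levels.append(level)
    return levels


def legal_merges(left: str, right: str, d: int = 2) -> list[str]:
    """Merging bits that keep at least d zeros between 1s across the boundary.

    Only the minimum run length is checked; the ten-zero maximum can't be
    exceeded by these short joins.
    """
    legal = []
    for merge in MERGING_CANDIDATES:
        inner_runs = (left + merge + right).split("1")[1:-1]
        if all(len(run) >= d for run in inner_runs):
            legal.append(merge)
    return legal


def dsv_after_repeats(codeword: str, repeats: int) -> list[int]:
    """DSV after each repetition when the codeword is joined with its only legal merge."""
    (merge,) = legal_merges(codeword, codeword)  # raises if there is a real choice
    stream = ""
    history = []
    for _ in range(repeats):
        stream += codeword + merge
        history.append(sum(nrzi_levels(stream)))
    return history


if __name__ == "__main__":
    print(dsv_after_repeats(CODEWORD, 8))  # drifts by -1 per repetition
```

Swapping in a codeword with an odd number of 1s, such as 10001000000001, makes the polarity alternate on every repetition, and the DSV then oscillates instead of drifting.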

Counting Sheep

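The quality of a CD drive's EFM algorithm was mostly of academic interest to the general population, but the CD copying scene rated burners by how well they handled weak sectors, using a "sheep" scale named after CloneCD's sheep mascot: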

| Sheep Rating | Capability |
|---|---|
| 0 | Can't duplicate CDs containing weak sectors |
| 1 | Can duplicate CDs containing SafeDisc up to version 2.4.x |
| 2 | Can duplicate CDs containing SafeDisc up to version 2.5.x |
| 3 | Can duplicate CDs containing any possible weak sectors |

My next objective was to purchase a CD drive capable of burning and re-reading the original track. Such a drive would be able to rip the entire CD in one pass, thereby providing a clean rip log.

The best resource I found is makeabackup.com/burners.html, which contains lists of optical drives categorized by sheep rating. The brand I found mentioned most commonly in archived piracy forums was Plextor, so I was surprised to see no Plextor drives on the 2-sheep list. Instead, I bought a Lite-On LTR-40125S.


I found several references to "three-sheep burners" as a semi-mythical achievement, but no concrete evidence of such a device ever being sold. The Yamaha CRW3200 in "Audio Master Quality" mode may have been able to duplicate certain discs at a three-sheep level by writing physically larger data tracks, at the cost of reduced capacity.

Making WAVs

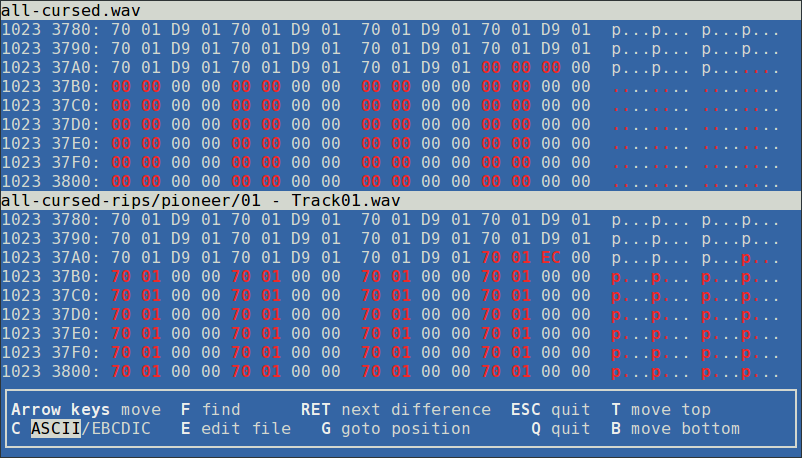

If you've been following along at home with your favorite hex editor, you'll notice that the "cursed" portion of the original audio contains a repeating __ 0x04 __ 0x04 pattern. This matches the 0xD9 0x04 0xD9 0x04 sample above. Was there something special about 0x04? Was there a correlation between weak sectors and EFM patterns? To answer these questions, I generated a synthetic test file containing single CDDA frames full of a suspect pattern, joined by long runs of 0x00 padding.

I wasn't able to figure out how to predict the behavior of a particular byte pattern. Of the patterns tested, many were harmless (or at least didn't affect any of my drives). Stretches of identical bytes were also harmless, so repetition alone wasn't the trigger. Given the difficulty of measuring voltage levels in an optical drive's ICs, I'll likely never figure out exactly why particular patterns cause errors.

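```python
import wave

FRAME_BYTES = 2352  # one CDDA sector: 588 stereo 16-bit samples (1/75 s)

# Three sectors of digital silence separate each test pattern.
SILENCE = b"\x00" * (FRAME_BYTES * 3)

with wave.open("all-cursed.wav", "wb") as out:
    out.setnchannels(2)      # stereo
    out.setsampwidth(2)      # 16-bit samples
    out.setframerate(44100)  # CDDA sample rate
    out.writeframes(SILENCE)
    # range(256) covers every byte value from 0x00 to 0xFF.
    for b1 in range(256):
        for b2 in range(256):
            # 4-byte pattern b1 0x01 b2 0x01, repeated to fill exactly one sector.
            frame = bytes([b1, 0x01, b2, 0x01])
            out.writeframes(frame * (FRAME_BYTES // len(frame)))
            out.writeframes(SILENCE)
```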
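Finding which patterns were damaged then comes down to comparing a rip against the generated file sector by sector. Here is a minimal sketch, assuming the rip has already been offset-corrected so it lines up sample-for-sample with all-cursed.wav, and assuming the layout written by the generator (three silent sectors, then one pattern sector followed by three silent sectors per pattern). The function names are my own; `byte_values` must match the generator's loop bounds.

```python
import wave
from typing import Iterator, Optional, Tuple

FRAME_BYTES = 2352   # one CDDA sector
SILENCE_SECTORS = 3  # padding written before the first pattern and after each one


def read_pcm(path: str) -> bytes:
    """Return the raw PCM bytes of a WAV file."""
    with wave.open(path, "rb") as wav:
        return wav.readframes(wav.getnframes())


def differing_sectors(reference: bytes, ripped: bytes) -> Iterator[int]:
    """Yield the index of every sector whose bytes differ between the two files."""
    sectors = min(len(reference), len(ripped)) // FRAME_BYTES
    for sector in range(sectors):
        start = sector * FRAME_BYTES
        end = start + FRAME_BYTES
        if reference[start:end] != ripped[start:end]:
            yield sector


def pattern_for_sector(sector: int, byte_values: int = 256) -> Optional[Tuple[int, int]]:
    """Map a sector to the (b1, b2) pattern written at or just before it.

    Returns None for the leading silence.
    """
    if sector < SILENCE_SECTORS:
        return None
    index = (sector - SILENCE_SECTORS) // (1 + SILENCE_SECTORS)
    return divmod(index, byte_values)


def report(reference_path: str, ripped_path: str) -> None:
    """Print each damaged sector and the test pattern it belongs to."""
    reference = read_pcm(reference_path)
    ripped = read_pcm(ripped_path)
    for sector in differing_sectors(reference, ripped):
        print(sector, pattern_for_sector(sector))
```

Calling `report("all-cursed.wav", "rip.wav")` (where rip.wav is a placeholder for your offset-corrected rip) attributes damage in a silent sector to the pattern just before it, which is useful when corruption spills past the pattern sector into the padding.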

CDDA vs CD-ROM

The issue of repeated patterns causing silent data corruption appears to be specific to audio CDs (CDDA); SafeDisc worked by checking for the presence of weak sectors, not by preventing the rip. However, silent data corruption can also affect data CDs (CD-ROM), as documented by a forum post on IXBT (summaries: Russian, English): specific sequences of bytes can be mistaken for the sector synchronization header, causing portions of individual files on an optical disc to become unreadable.

Over 2 / 3 of the drives tested fail to read the file if it contains a signature that turns into a data sequence identical to Sync Header! Except Toshiba and HP, all manufacturers use sync header as a key sign of the sector start at data reading.

The triggering conditions are a little different. For a data CD, the bytes must scramble into an exact match for the sector sync header. In audio CDs, the responsible byte patterns must be repeated many times to drive the DSV high enough to force read errors.
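The data-CD case can be reproduced on paper. As I understand ECMA-130, every byte of a CD-ROM sector after the 12-byte sync pattern is XORed with the output of a 15-bit LFSR (polynomial x^15 + x + 1, preset to 1) before it is written. Any 12 bytes of user data equal to the sync pattern XORed with the scrambler output at that position will therefore appear on disc as a perfect sync header. This sketch computes that "signature" for a given offset; the function names are my own, and it ignores the EDC/ECC fields, which are computed before scrambling and don't change the argument.

```python
SECTOR_BYTES = 2352
SYNC = bytes([0x00] + [0xFF] * 10 + [0x00])  # 12-byte sector sync pattern


def scramble_table(length: int = SECTOR_BYTES - len(SYNC)) -> bytes:
    """Keystream from a 15-bit LFSR (x^15 + x + 1) preset to 1, emitted LSB first."""
    register = 0x0001
    table = bytearray()
    for _ in range(length):
        byte = 0
        for bit in range(8):
            byte |= (register & 1) << bit
            feedback = (register ^ (register >> 1)) & 1
            register = (register >> 1) | (feedback << 14)
        table.append(byte)
    return bytes(table)


TABLE = scramble_table()  # TABLE[0] lines up with sector byte 12


def scramble(sector: bytes) -> bytes:
    """XOR everything after the sync field with the keystream (its own inverse)."""
    body = bytes(b ^ k for b, k in zip(sector[len(SYNC):], TABLE))
    return sector[:len(SYNC)] + body


def sync_signature(offset: int) -> bytes:
    """12 bytes that scramble into SYNC when stored at byte `offset` of the sector."""
    if not len(SYNC) <= offset <= SECTOR_BYTES - len(SYNC):
        raise ValueError("offset must fall inside the scrambled part of the sector")
    start = offset - len(SYNC)
    key = TABLE[start:start + len(SYNC)]
    return bytes(s ^ k for s, k in zip(SYNC, key))


if __name__ == "__main__":
    # Mode 1 user data starts at byte 16 (after 12 sync + 4 header bytes).
    print(sync_signature(16).hex(" "))
```

Because the keystream depends only on position within the sector, the same signature works in any sector, which is why a file containing it at the right alignment can trip drives that trust the sync header.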

"Only a few" was an understatement. What looks to have been a flourishing community of music archivists and game pirates has nearly vanished from the Internet, losing years of reports and research about how CD error handling works in real-world conditions. This writeup was made possible by the Internet Archive.

A typical high-end hard drive of that era might store around 100 GB of data. Music was typically ripped to MP3 or AAC; there was no point in worrying about the exact value of bits fed into a lossy encoder.

All of my personal CD rips are archived along with their rip log, so I can verify the audio CRCs during backup tests.

This thing's a blast from the past. The CD ripping scene imploded before SATA had reached optical drives, so it's got PATA pins and one of those four-pin Molex power sockets.

I found several reviews praising how AMQ discs skipped less often, but no reviews specifically about its capabilities with weak sectors. This is unsurprising given that the capacity differences would render it unusable for software piracy.

I say "original", but it turns out that's wrong at this level of detail. I used Audacity to cut out that section because I assumed it could move samples from one lossless file to another without changing them. Not so! Audacity will happily _mangle the shit_ out of audio data. You can verify this by opening a .wav, writing it back out, and comparing the two files. It's also fun to look at the spectrogram of an "empty" audio file that got passed through Audacity.

I also ran tests with other padding bytes, to determine that the corruptions were caused by inter-frame copying (vs dumb "zero out bad bytes" logic).

A variant wrote the patterns to separate short tracks. When I ripped these with the Lite-On, EAC reported "OK" CRCs for all of the tracks, despite them being very obviously mangled. This is alarming behavior from a ripping program designed to detect corrupt data. I suspect there's an edge condition in the cache busting that doesn't work right when tracks are very short.